Introduction

Any business of considerable size requires Business Intelligence (BI) to drive decisions and gain insights. Traditional BI tools often involve setting up dashboards, writing complex SQL queries, and integrating multiple data sources. However, these solutions can be difficult to use for those without technical expertise, making it challenging for decision-makers to extract meaningful insights quickly.

What if we could implement an affordable, minimal-setup, and user-friendly BI system directly within nopCommerce, eliminating the need for complex external tools? LLMs may be the answer, and this tutorial will guide you through the process.

Why Use LLMs for BI in nopCommerce?

The foundation of any BI reporting system lies in constructing SQL queries that efficiently retrieve and summarize relevant data. This involves selecting the appropriate tables, filtering the necessary data, and structuring queries to generate meaningful insights. Traditionally required high level SQL skills, Large Language Models (LLMs) offer a new way to simplify BI by allowing non-technical users to query data using natural language.

What We’ll Build

We will develop a system that enables LLM-powered BI in nopCommerce by:

Converting natural language queries into SQL.

Executing the SQL on the nopCommerce database (defaulting to SQL Server).

Displaying results using Tabulator.js.

Demo Application

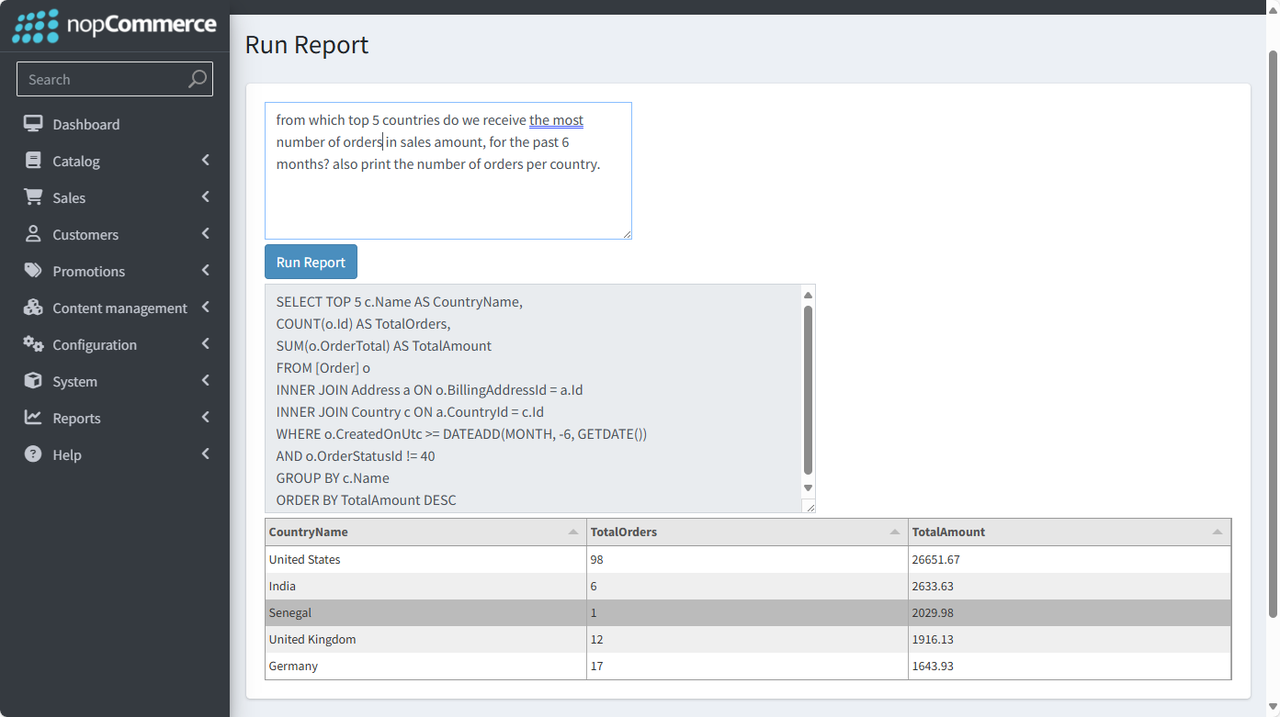

Before diving into the technical details, let's first see the demo application we are going to build.

NOTE: UI is kept to the bare minimum for brevity.

As shown in the following image, we have a simple textbox for entering user queries along with a Run Report button to send the query and generate output.

The output consists of the generated SQL and the data presented in a table format using Tabulator.js. The generated SQL is what the LLM returns to us when we feed it the user query. Our code then takes this SQL and queries the database to get back the data for final display.

Setting Up the Environment

Prerequisites

To follow along, you need:

A development environment for nopCommerce - this tutorial uses nopCommerce 4.80.

A SQL Server database - for simplicity, this tutorial uses the most basic ADO.Net DataReader instead of nopCommerce's default ORM, LinqToDb.

API access to an LLM - OpenAI is chosen for this tutorial due to its popularity, but the concept can be applied to other LLMs with just some slight modification.

Technology Stack

Backend: .NET Core (C#)

Database: SQL Server

Frontend: Tabulator.js

AI Model: OpenAI GPT-4o

Tabulator.js is used in this tutorial for displaying the data. Tabulator.js is chosen simply because I am very familiar with this data grid library. You can replace it with any other data grid library of your choice.

High-level Structure

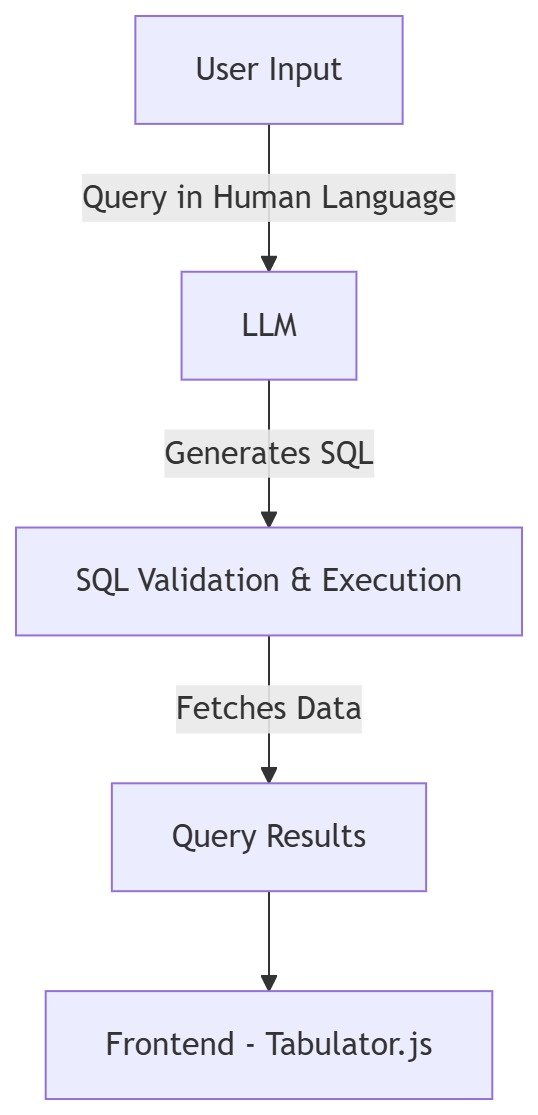

To build an LLM-powered BI tool within nopCommerce, we need to connect multiple components, each playing a crucial role in the process. Below is a breakdown of how each piece interacts:

1) User Query Input

The user enters a business-related question in natural language.

2) LLM Processing

The system retrieves the database schema (in our case, from a pre-generated

.sqlfile).The LLM is prompted with the user’s query and schema details.

The LLM generates a SQL query based on the provided context.

3) SQL Execution

(Optional) The generated SQL query is validated and sanitized to prevent malicious commands.

The query is executed against the SQL Server database, and data is retrieved.

4) Displaying Data

The retrieved data is sent to the frontend.

Tabulator.js dynamically renders the data.

Visualization

To better illustrate the flow, here’s a MermaidJS diagram:

Implementing the "Human-to-SQL" Translator

Now that we understand the high-level concept, it's time to implement the system. As explained in the previous section, an LLM is used to translate users' queries into SQL scripts. Therefore, the success of this app lies in the accuracy of the "Human-to-SQL" translation. Enter "prompt engineering."

Prompt Engineering for SQL Query Generation

If you are new to LLMs, it's important to understand the concept of "prompt engineering" — the practice of crafting input prompts in a way that guides the model to produce accurate and relevant responses. In the context of BI reporting, this means carefully composing a query that provides the LLM with enough context (such as table structures and expected output format) to return a valid and optimized SQL statement.

Example prompt:

Notice how we instructed the LLM to "return only SQL and no additional message nor formatting", so that it's easier for us to extract the SQL query. We don't want the model to include a bunch of descriptive text, explanations, or markdown formatting, as that would complicate parsing and executing the query in our backend. By keeping the prompt strict and focused, we increase the reliability of the output and reduce the chances of misinterpretation or even runtime errors.

Helping LLM Understand nopCommerce’s Database Schema

If you look closely at the prompt mentioned in the previous section, you'll notice that we are supplying LLM with {FULL_DB_INFO}. We need to do this because LLMs do not have enough information about nopCommerce's database schema. We must provide the schema along with additional context. In this tutorial, three types of data are supplied:

Database Schema - provides LLM with information about what tables and fields it can query data.

Foreign Keys - provides LLM with information about how the different tables are linked

Enums - fields like

OrderStatusIdare stored as integers (e.g., 10, 20, 30), but LLMs cannot interpret these values without context. We need to augment them with meaningful labels, such as10 = Pending.

Sample code:

Full code: NopSchema.sql

Calling the OpenAI API from nopCommerce

With all the preparatory work done, it's now time to actually call OpenAI's API from our code.

On the back-end C# side, five main methods are used in this tutorial:

RunReport- this is theAction Methodcalled by the front-end AJAX code upon clicking the "Run Report" buttonGenerateSQLQuery- this function calls the API and returns the resultGetPrompt- this function forms the prompt that will be sent to the APIExtractSQLFromResult- this function extracts the generated SQL from the API responseExecuteSQLQuery- this function executes the generated SQL against the database and returns the data for displaying in the front-end

As you can see, the process is actually quite straightforward. But there is a few notes to take:

In

GetPrompt, we are taking the full database schema. This can be very long, which means more money spent on the API as LLM APIs are typically charged by input and output keywords. Some less important tables can be removed from the database schema sent to LLM, if it's confirmed that those tables will never be queried.In

ExtractSQLFromResult, we did not sanitize the data returned by LLM. Remember in our prompt, we instructed the LLM to not print any additional messages and formatting. We trust that the model will follow the instructions diligently, but it may not always be the case. Some models adhere to instructions more strictly than other models, so you just need to test this out.In

ExecuteSQLQuery, instead LinqToDb which is used by default in nopCommerce, we use the good old ADO.Net for querying data. Since we are instructing the LLM to give us raw SQL, using ADO.Net is good enough. But it does not take into account the fact that nopCommerce now supports 3 databases - MS SQL Server, MySql and PostgreSQL. We can, of course, install ADO.Net connectors for MySql and PostgreSQL, but this is omitted in the tutorial to keep the scope of this tutorial within control.Overall, many error checking is omitted, but should be added if this code is intended to run in a production environment so as to ensure the stability and security of the app.

Displaying Results in the Front-end

Now that the backend is setup to provide the data querying capability, let's move to the front-end to complete the app.

Execution Sequence

When the "Run Report" button is clicked, an AJAX request is issued to the endpoint /Admin/Reporting/RunReport to retrieve the data. Note that we are making use of Tabulator.js's setData function to do the AJAX request for us.

For those that are not familiar with how Tabulator.js works, here's the sequence:

Clicking the button

#run-reportcalls Tabulator.js'ssetDatamethod to issue an AJAX request.userInputis read from thetextareaas part of the AJAX submission.When a response is received from the back-end's

RunReportmethod, Tabulator.js'sajaxResponsecallback function is used to extract the required data. At the same time, the textbox#sqlis also being populated with the generated SQL for reference.Tabulator.js's

autoColumnsfeature automatically and dynamically generates the columns based on the JSON being fed.

With that, it completes the sequence and the data is shown in Tabulator.js.

Let's try some fun queries next!

Onto Some Fun Business Intelligence Queries!

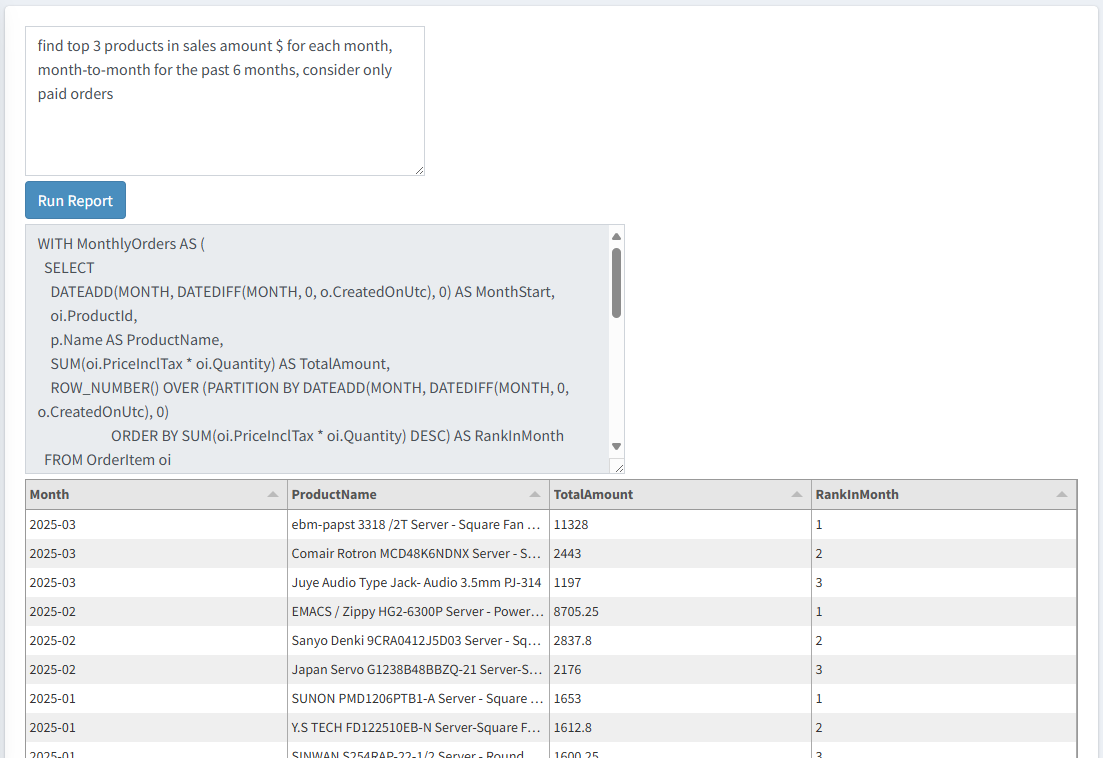

Top 3 products in sales amount for each month, for the past 6 months

find top 3 products in sales amount $ for each month, month-to-month for the past 6 months, consider only paid orders

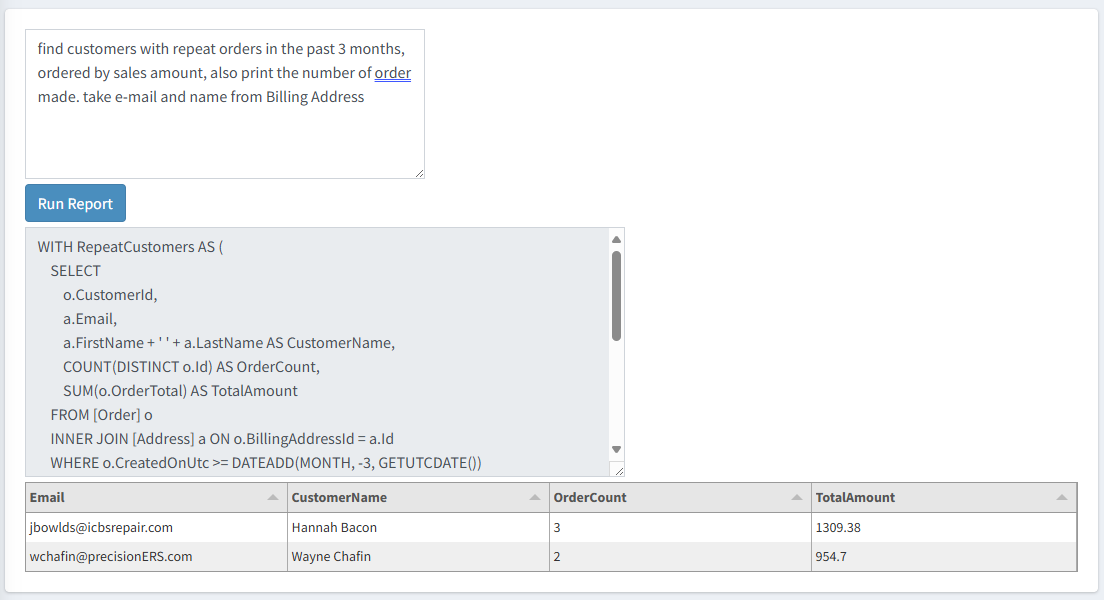

Customers with repeat orders in the past 3 months, ordered by sales amount

find customers with repeat orders in the past 3 months, ordered by sales amount, also print the number of order made.

Top 10 countries by sales amount in the last 6 months

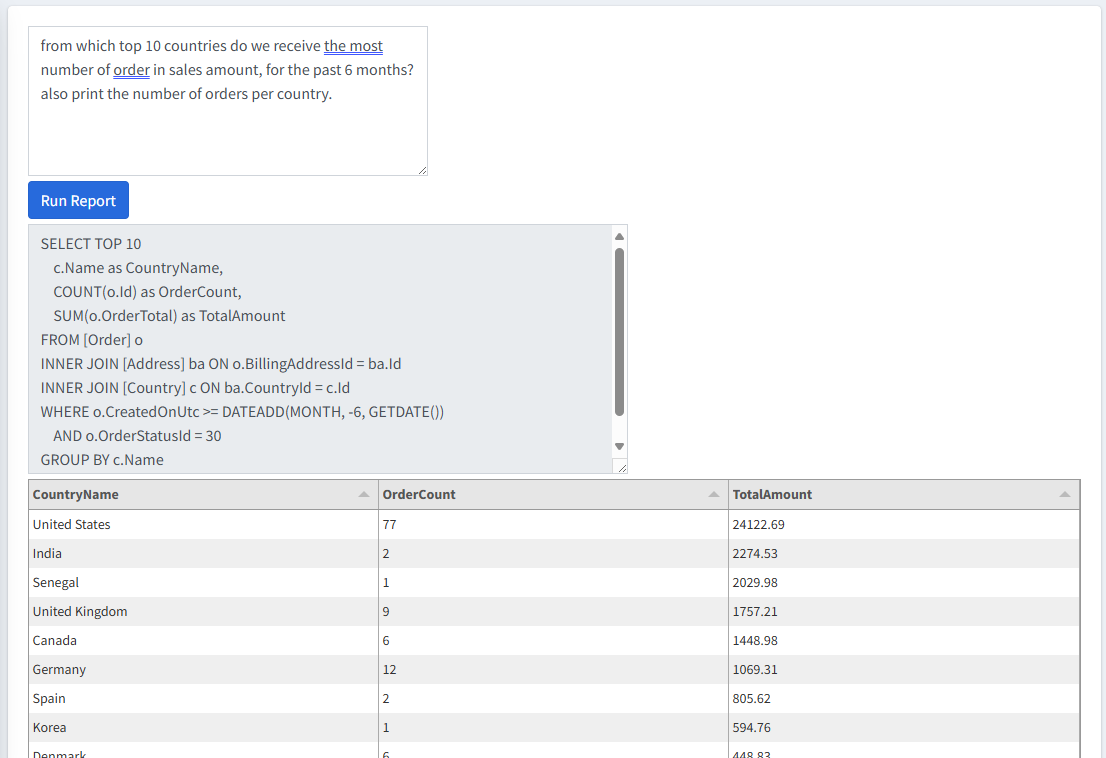

from which top 10 countries do we receive the most number of order in sales amount, for the past 6 months? also print the number of orders per country.

Number of products sold by price range

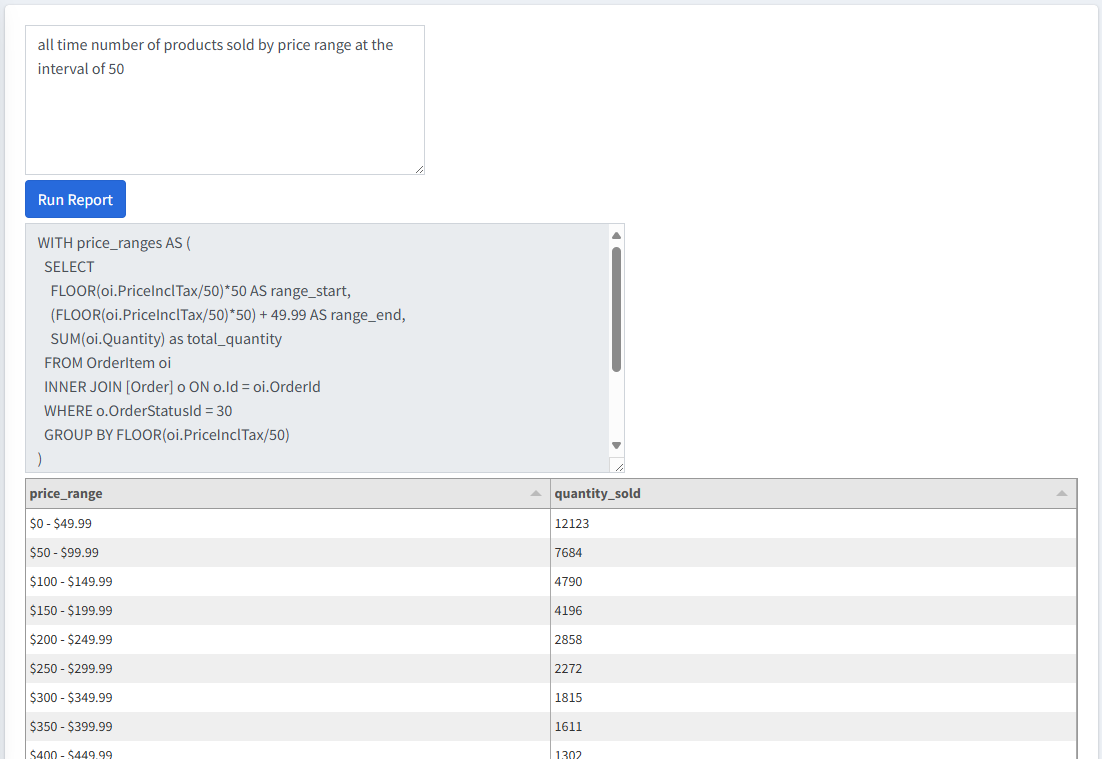

all time number of products sold by price range at the interval of 50

Conclusion

This tutorial shows that, with the help of LLMs like ChatGPT, creating a lightweight and accessible BI tool inside nopCommerce is entirely feasible. By combining natural language input, dynamic SQL generation, and real-time data rendering with Tabulator.js, even non-technical users can ask meaningful questions and receive actionable insights instantly.

Of course, the code presented here should be thoroughly reviewed and hardened to ensure it meets the necessary standards for security, performance, and stability in a production environment.

There are also many more features we can build on top of this foundation to enhance usability, flexibility, and analytical power:

Saving the generated SQL for repeat execution: This allows users to quickly re-run commonly used queries without needing to re-enter natural language prompts. A query history feature could also allow for organizing saved queries into folders or tagging them with metadata.

Displaying data differently depending on the data type: The system can intelligently choose the most appropriate visualization based on the structure and nature of the data. For example, if the result contains numeric summaries grouped by category, a bar chart might be automatically selected. If the data includes trends over time, a line chart could be rendered instead.

Scheduling and automation: Users could schedule queries to run at regular intervals and have the results emailed or exported automatically.

Drill-down support: Allow clicking into summary rows to see detailed breakdowns (e.g., clicking a sales total to see the underlying orders).

Charting integration: Supporting dynamic chart generation from result sets, such as bar charts for sales by category or pie charts for customer segmentation.

That said, this tutorial demonstrates that the core foundation of a powerful, LLM-driven BI tool can be set up with relative ease. With incremental improvements, such a system can evolve into a highly capable, cost-effective alternative to traditional BI platforms, natively integrated into your nopCommerce store.